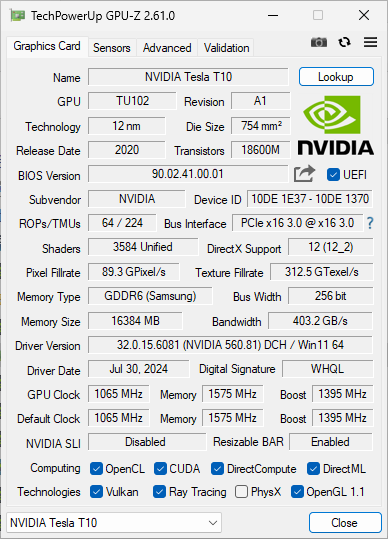

Recently, a special graphics card, the Tesla T10, has appeared on the Chinese market. NVIDIA originally designed this GPU exclusively for cloud gaming services, primarily its GeForce NOW platform. Retired cards have now entered the secondary market, priced at around 1,350 RMB (approximately 190 USD) on Chinese platforms. Because they are so affordable, I purchased two to examine their performance.

Deploying a Prometheus Monitoring System on Arista Switches

Overview: This article explains how to run node_exporter and snmp_exporter in Docker containers on an Arista switch, and how to monitor switch status in real time with Prometheus.

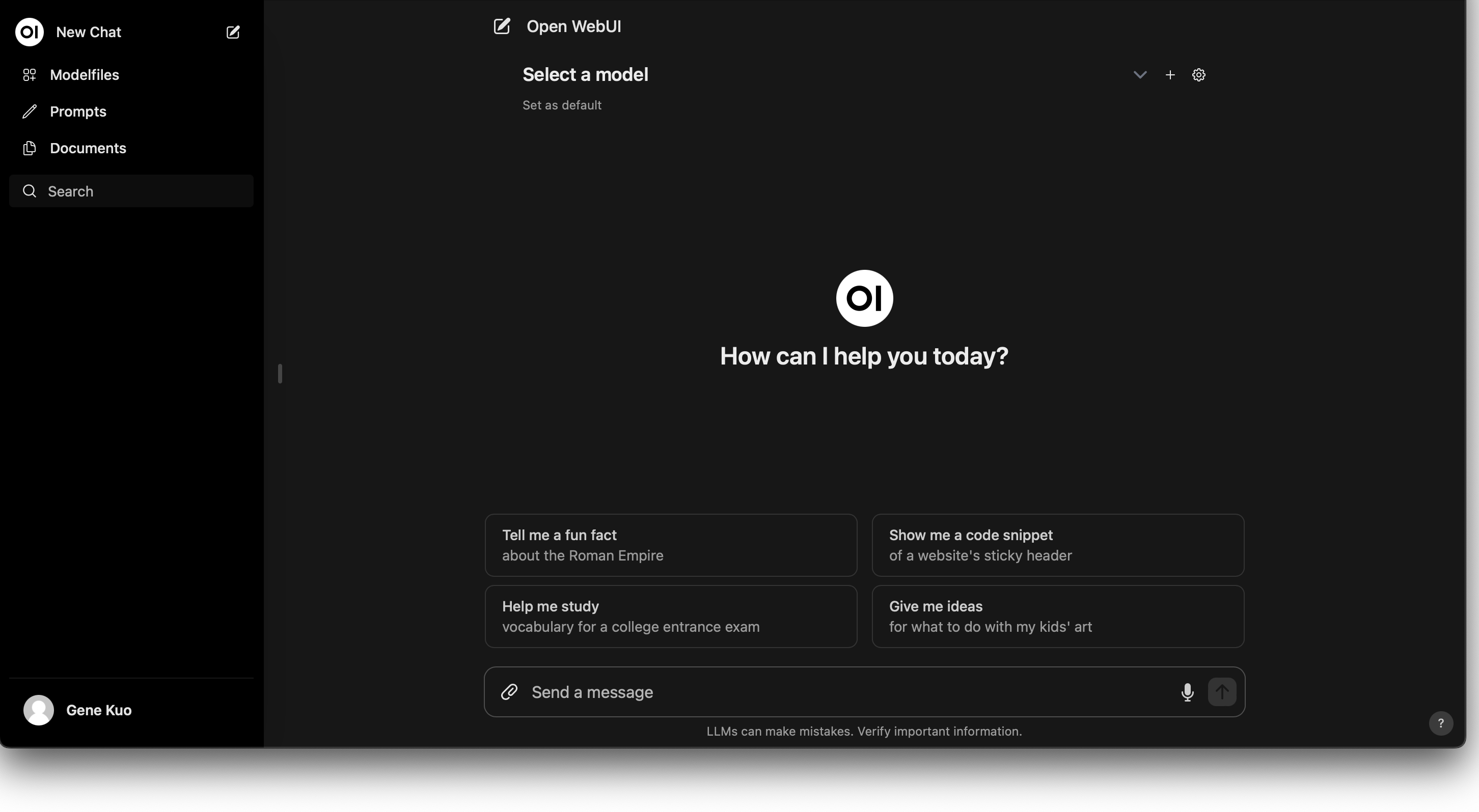

Leveraging NVIDIA GPUs on Kubernetes for LLM Chatbot Deployment

With the rapid advancement of artificial intelligence and large language models (LLMs), more and more companies and developers are eager to integrate language models into their own chatbot systems. This article guides readers through deploying a high-performance LLM chatbot in a Kubernetes environment using NVIDIA GPUs, covering everything from essential installation and tools to detailed deployment steps.

How to Monitor Container PSI Metrics

Introduction: In the previous article, we discussed PSI (Pressure Stall Information) and how to monitor system-wide PSI metrics. This article dives deeper into how to monitor PSI information for a single container.

Understanding and Applying Linux PSI (Pressure Stall Information) Metrics

Introduction: When CPU, memory, or I/O resources are under contention, workloads may suffer from increased latency, degraded performance, and even abrupt OOM (Out of Memory) termination. Without monitoring that can detect such contention, users risk overcommitting their hardware, which leads to frequent crashes. Linux kernel 4.20 introduced PSI (Pressure Stall Information), a metric that lets users see precisely how resource shortages affect overall system performance. This article briefly explains PSI and how to interpret its data.

Deploying Charmed Kubernetes with OpenStack Integrator

Introduction: Charmed Kubernetes is a Kubernetes deployment solution provided by Canonical that uses Juju to deploy Kubernetes across various environments. This article walks through deploying Charmed Kubernetes onto OpenStack and using the OpenStack Integrator to provide Persistent Volumes and Load Balancers to Kubernetes.

Quickstart Kubernetes Deployment: Using kops on OpenStack – Practical Guide

Kubernetes offers multiple deployment options, and among the available tools, kops stands out for its ease of use and tight integration. This article takes an in-depth look at kops and guides readers through the practical steps to rapidly set up a Kubernetes cluster in an OpenStack environment.

How to Select the Right Ceph SSD

With the continuous decline in SSD prices, many enthusiasts and enterprises are beginning to explore using Ceph to build storage pools based on SSDs, aiming for higher performance. However, to achieve optimal Ceph performance, selecting the right SSD is critical. In this article, we will explore how to choose SSDs that are well-suited for Ceph.

AMD GPU and Deep Learning: Practical Learning Guide

Historically, AMD GPUs have been considered less suitable for deep learning tasks, leading many deep learning users to prefer NVIDIA GPUs. Recently, however, LLMs (Large Language Models) have gained significant attention, and numerous research teams have released models based on LLaMA, which made me eager to experiment. I have several AMD GPUs with ample VRAM, so I decided to test these cards for running LLMs.

Introduction to Kubernetes Cluster-API

Kubernetes has been developing for many years in the cloud-native world and has spawned numerous specialized projects for managing its lifecycle, such as kops and Rancher. VMware, on the other hand, has launched a project named Cluster API, which uses Kubernetes' own capabilities to manage other Kubernetes clusters. This article briefly introduces the Cluster API project.